ECHELON Reservoir Simulation Software: Billion Cell Calculation

Today, in a joint press release with IBM, Stone Ridge Technology announced the first billion cell reservoir simulation completed entirely on GPUs by ECHELON software.

Today, in a joint press release with IBM, Stone Ridge Technology announced the first billion cell reservoir simulation completed entirely on GPUs by ECHELON software.

Posted in: ECHELON Software

The football field vs. the ping-pong table

In a joint press release with IBM today, we announced the first billion cell engineering simulation completed entirely on GPUs. The calculation and the results that follow powerfully illustrate i) the capability of GPUs for large scale physical modeling ii) the performance advantages of GPUs over CPUs and iii) the efficiency and density of solution that GPUs offer. In this blog post I’ll present details on our calculation and an analysis of the results.

We had been thinking about using ECHELON software, our high-performance petroleum reservoir simulator, to perform a "hero-scale" billion-cell calculation for some time to promote the software's capabilities. ECHELON software is unique in that it is the only reservoir simulator (perhaps the only engineering simulator) that runs all numerical calculations on GPUs. Marathon Oil, an early partner in the development of ECHELON software, has been using it for two years on their asset portfolio. While the billion-cell mark has been breached before it’s not been tried on the GPU platform. The recent announcement of a billion-cell calculation by ExxonMobil using the Blue Water facility at NCSA spurred us forward. In part, we were curious how ECHELON software would perform at this scale but we also thought it would highlight the capabilities of GPUs for engineering modeling and provide a vehicle to promote the differences between GPU and CPU simulation.

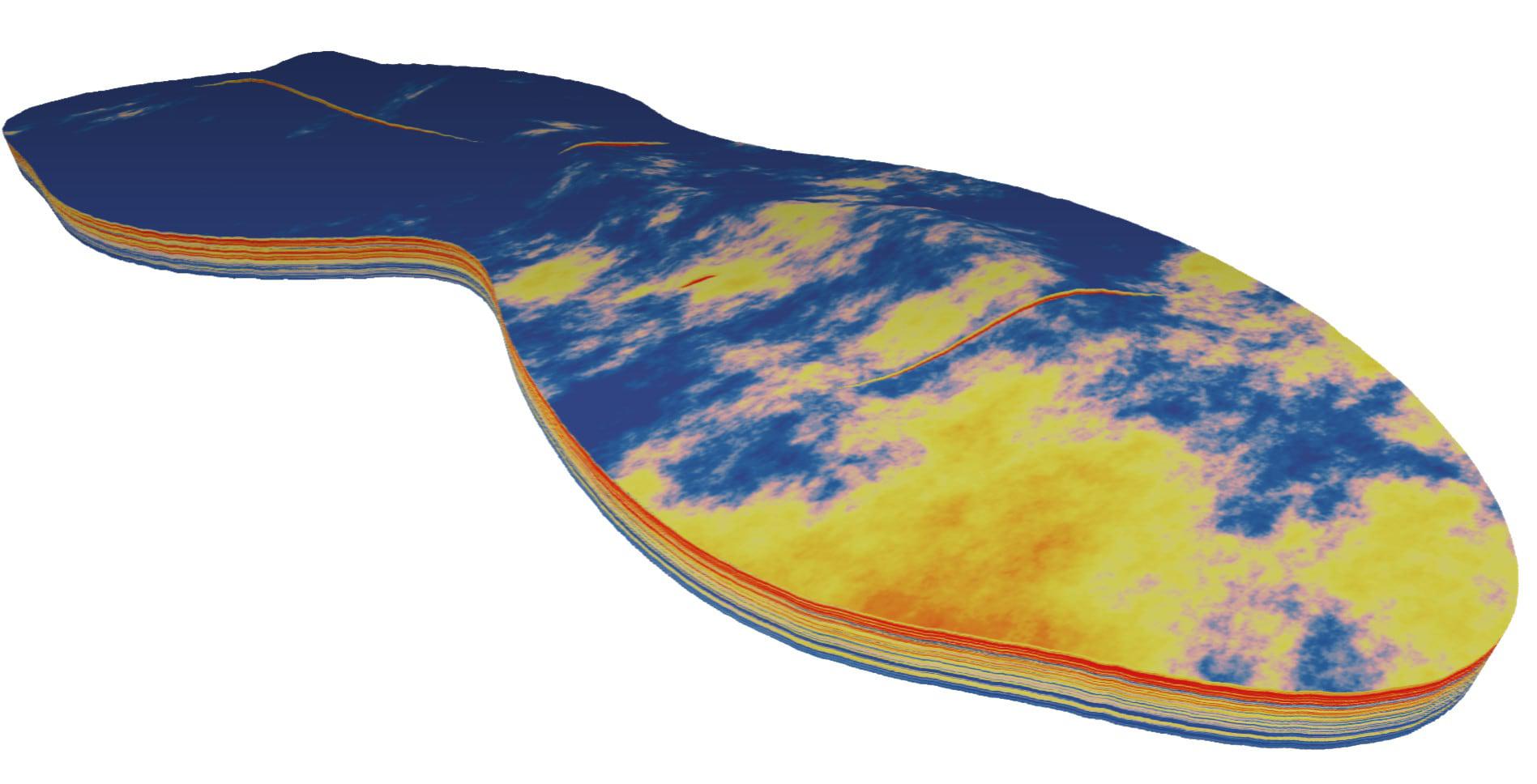

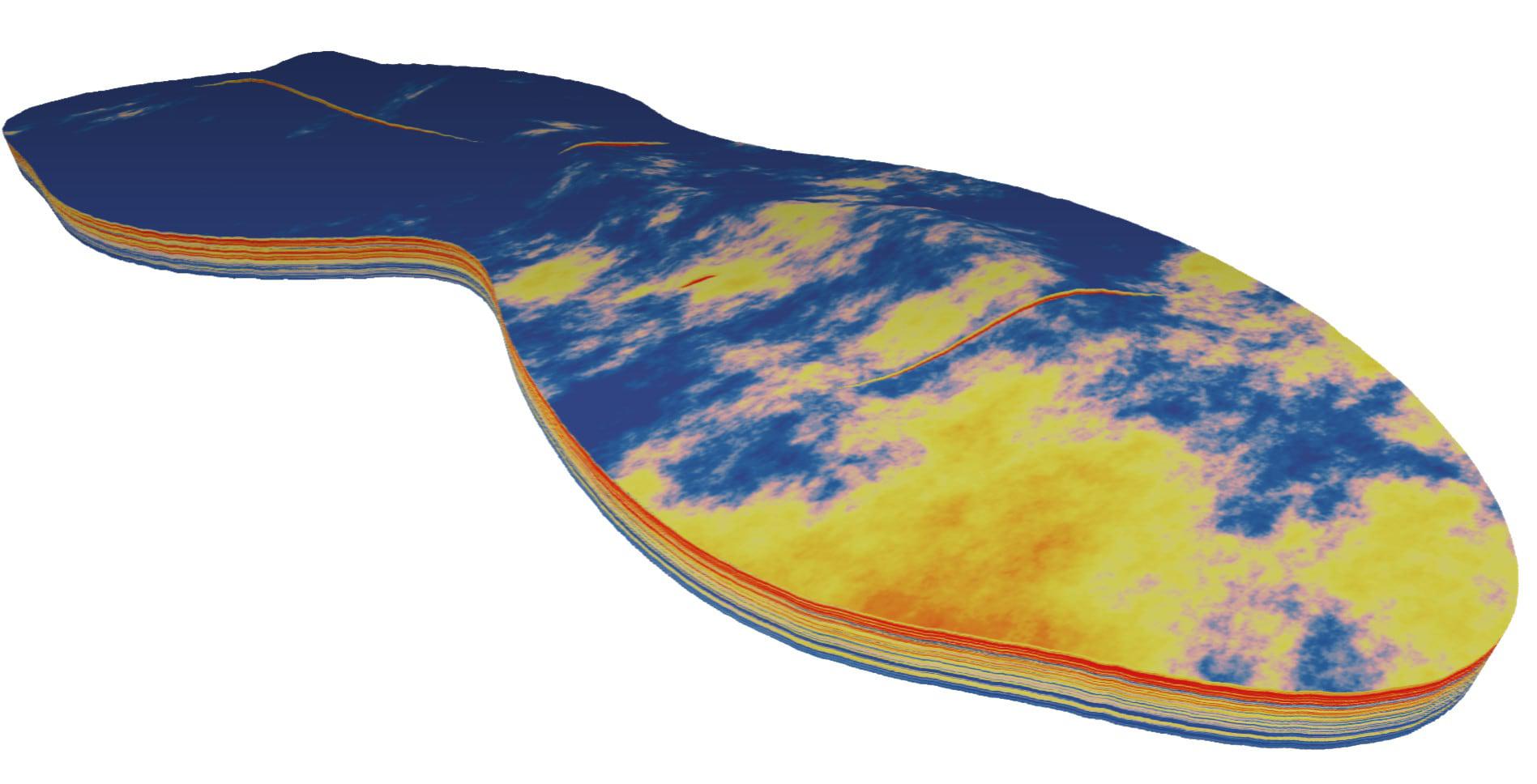

Billion-cell models are not easy to come by, as models at that scale are extremely difficult to build, simulate, and visualize. There are a few places in the world where this level of resolution and detail is beneficial but for the vast majority of simulation cases a billion cells is several orders of magnitude larger than is commonly used. At this “hero-scale” the main purpose is to stress-test simulators and workflows and frankly to show off capability. For our purposes we created a model called Bactrian using publicly available ARAB-D log data collected from a large Middle East carbonate field. With help from colleagues at iReservoir we built a three-phase system with 1.01 billion cells and 1,000 wells. Bactrian is meant to be analogous to other large-scale Middle East models. Before we could approach this monster model we had to make some code modifications such as enabling the NVLINK transport layer of the Minsky server, distributed initialization, further optimized GPU memory usage and more efficient overlap of computation and MPI communication. We tested on a series of increasingly large homogeneous box models to insure accuracy and ferret out problems that emerge at large scale. Finally, we attacked the Bactrian model. The milestone calculation simulated 45 years of production. It was completed in 92 minutes using 30 IBM OpenPower Minsky servers each containing 4 NVIDIA P100 GPUs. An image of the model is shown in Figure 1.

Figure 1: Representation of the Bactrian billion cell model showing porosity variation.

Billion cell simulations using CPU based codes have been reported in the past. Saudi Aramco has been the leader in promoting large, high resolution models driven by the responsibility of stewarding some of the largest oil reservoirs in the world. Over the past several years Aramco has published a series of impressive benchmarks using its simulator GIGAPOWERS to run a one billion cell benchmark model based on the super-giant Ghawar field. The model is a three-phase black oil system with 1.03 billion cells, 3,000 wells and 60 years of production. The first results [1] reported a runtime of about 4 days but this timing has been updated as both hardware and algorithms have advanced and the most recent publications indicate simulation times in the range of 21 hours[2]. Aramco reports running GigaPowers on 5640 cores hosted on 470 server nodes to achieve timings on this order.

Total/Schlumberger has also published excellent results using their multi-core parallel simulator INTERSECT on a billion-cell model. E. Obi et. al. [3] reported the simulation of 20 years of production data for a 1.1 billion cell compositional model with 361 wells in 10.5 hours using 576 cores on 288 servers.

The most recent result is credited to ExxonMobil. In February they announced a record calculation using the full Blue Water facility at NCSA, (716,800 cores and 22,400 server nodes) to simulate one billion cells. No information on the model or the timing or the scaling ability was released with the announcement so it’s difficult to assess on any metric other than the ability to run reservoir simulation on an enormous number of cores.

There is a debate underway in the HPC world between a "scale-out" and a "scale-up" approach. Scale-out is the idea of adding more compute components to parallelize a load. Scale-up is the idea of using fewer compute components by making each one more powerful. CPU codes generally scale out by dividing problems into large numbers of domains and optimizing to run on an equally large number of processors. The success of the Google model which emphasizes the use of huge numbers of commodity cores to run the MapReduce algorithm has influenced practitioners in this direction. GPU codes take the scale-up approach by using fewer but more powerful compute components. From an abstract and idealized perspective one may envision the scale-out approach as using 100,000 separated and intercommunicating cores to solve a problem vs. the scale-up approach of ganging them together into 100 groups of 1000 very tightly coupled cores on one chip. Intuitively it makes sense to do as much communication as possible on-chip where data transfer is vastly more efficient with lower latency, lower power consumption and higher bandwidth. This results in fewer and larger domains, less overall communication and more freedom in algorithm choice. Algorithm choice is a big deal. Math is hard to overcome with additional compute power and given the choice between modern hardware and 80’s algorithms or 80’s hardware and modern algorithms most computational scientists would choose the latter. An advantage of GPUs is that one can use both the superior algorithm and the powerful hardware. Many CPU codes for reservoir simulation that are designed to massively scale-out are forced to use weaker solver algorithms that are easier to spread over thousands of cores. Those that don’t find limitations to their scaling ability.

The purpose of our billion-cell effort was to highlight ECHELON's capabilities and the efficiencies that the GPU scale-up approach offers. There is an inherent difficulty in comparing different calculations because the models employed by Aramco, Total/Schlumberger and ExxonMobil are all different from each other and from the one that we used. Our model, built from public logs was made to approximate Ghawar and we expect it is a good proxy as such. The principle conclusion we draw from our results is that ECHELON software used an order of magnitude fewer server nodes and two orders of magnitude fewer domains to achieve runtimes that are an order of magnitude less than those reported by analogous CPU based codes. ECHELON software used 30 server nodes where Aramco used 470 and Total/Schlumberger used 288. ECHELON software divided the billion-cell problem into a modest 120 domains, where Aramco used 5,000 and Total/Schlumberger used 576. Our timing was 2.0 min per year of simulation compared to 21.5 for Aramco and 32.5 for Total/Schlumberger.

Three Things That Matter: Bandwidth, Bandwidth and Bandwidth

The secret to the gap in efficiency and performance between GPUs and CPUs for reservoir simulation is memory bandwidth and a code that can optimally exploit it. The performance of reservoir simulation, like most scientific applications is bound by memory bandwidth. More bandwidth yields more performance. The relationship is remarkably linear, in fact, as illustrated in Figure 2 reproduced from a previous blog article.

Figure 2: ECHELON performance vs. hardware memory bandwidth

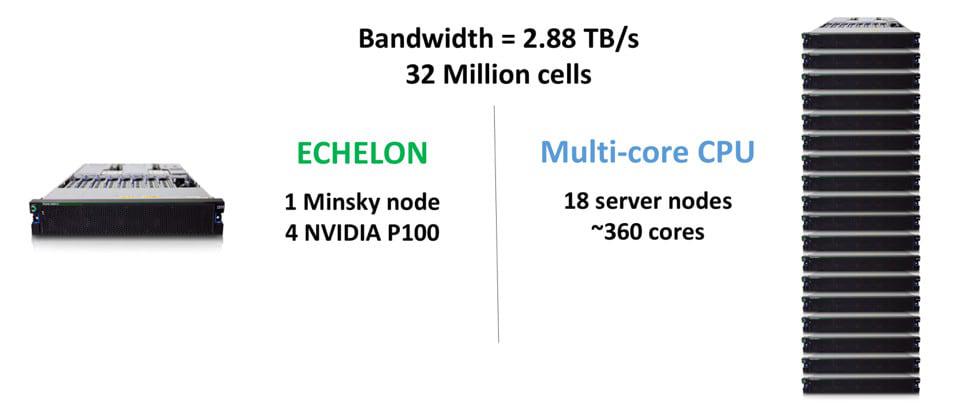

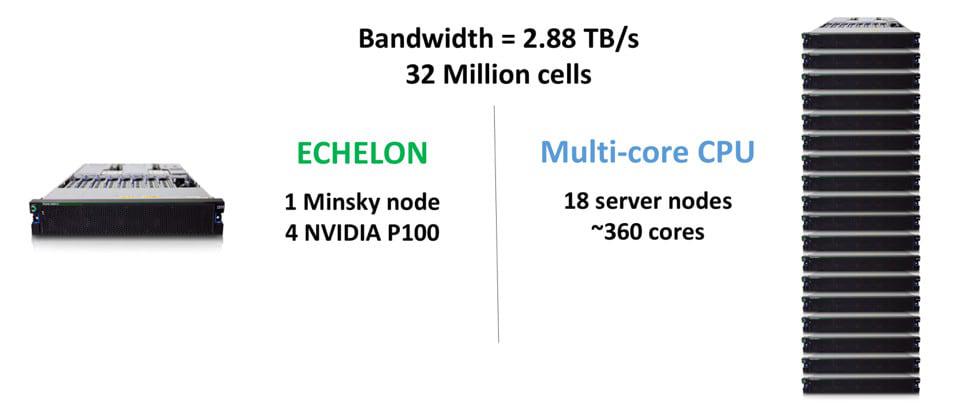

On a chip to chip comparison between the state of the art NVIDIA P100 and the state of the art Intel Xeon, the P100 deliver 9 times more memory bandwidth. Not only that, but each IBM Minsky node includes 4 P100’s to deliver a whopping 2.88 TB/s of bandwidth that can address models up to 32 million cells. By comparison two Xeon’s in a standard server node offer about 160GB/s (See Figure 3). To just match the memory bandwidth of a single IBM Minsky GPU node one would need 18 standard Intel CPU nodes. The two Xeon chips in each node would likely have at least 10 cores each and thus the system would have about 360 cores. Running a model on the 4 P100 GPUs requires a modest division of the problem into 4 domains whereas that same model on a CPU machine with equivalent bandwidth uses 360 domains with about 100x the number of data transfers. This illustrates some of the patent differences between scale-out and scale-up approaches to HPC.

Figure 3: A single IBM Minsky node delivers 2.88TB/s of memory bandwidth. 18 typical Intel Xeon nodes are needed to match that performance.

The Football Field vs. the Ping-Pong Table

Most of today's multi-core CPU codes began their development in the early or mid 2000's. This was a time when the industry mantra emphasized a coming era of "cheap cores" and codes were developed accordingly. They were designed to scale efficiently over hundreds if not thousands of cores. The recent ExxonMobil result is the nonpareil exemplar of this mode of thinking. Lacking details, it is still impressive that the calculation scaled over 716,800 cores. A large company, like ExxonMobil, has many considerations in its decision to pursue a particular approach to HPC including its investment in software and the costs associated with a platform shift. With due deference to the excellent and talented team there, however, it is my opinion that this approach of scaling out on tens of thousands of cores is precisely the wrong direction for simulation. It’s wrong from the perspective of overall performance, cost, power efficiency and resource utilization. CPU codes like those from ExxonMobil, Total/Schlumberger and Aramco must be run on so many cores because they are essentially scavenging memory bandwidth from entire clusters to achieve performance.

For most companies that do simulation the ExxonMobil approach is simply inaccessible and would likely discourage the application of HPC resources to reservoir simulation. For them GPU codes like ECHELON software make an excellent option. We have demonstrated that we can run 1 billion cells on 30 nodes. We can also run 32 million cells on a single Minsky node or 8 million cells on a single P100 card in a desktop workstation. Very large models can be run in fantastically fast times on modest hardware platforms. With elastic cloud solutions such as those available from Nimbix and now IBM any company can have access to vast computing power on modest resources to run one or thousands of model realizations. This is the advantage of GPU computing and the scale-up approach it advocates; ultra-fast, dense solutions that democratize HPC by bringing it within reach of every customer.

The Blue Water facility used by ExxonMobil is approximately the size of half a football field. The Minsky rack we used for our calculation occupied an area of about half a ping-pong table. This imagery of the football field vs. the ping-pong table is one that cleanly represents the powerful and growing advantages of GPU computing.

[1] SPE 119272 "A Next-Generation Parallel Reservoir Simulator for Giant Reservoirs", A. Dogru et. al. 2009 SPE Reservoir Simulation Symposium.

[2] SPE 142297 "New Frontiers in Large Scale Reservoir Simulation", A. Dogru et. al. 2011 SPE Reservoir Simulation Symposium.

[3] IPTC 17648 "Giga Cell Compositional Simulation", E. Obi et. al., 2014 International Petroleum Technology Conference.

Vincent Natoli

Vincent Natoli is the president and founder of Stone Ridge Technology. He is a computational physicist with 30 years experience in the field of high-performance computing. He holds Bachelors and Masters degrees from MIT, a PhD in Physics from the University of Illinois Urbana-Champaign and a Masters in Technology Management from the Wharton School at the University of Pennsylvania.